Calibration of pressure transmitters

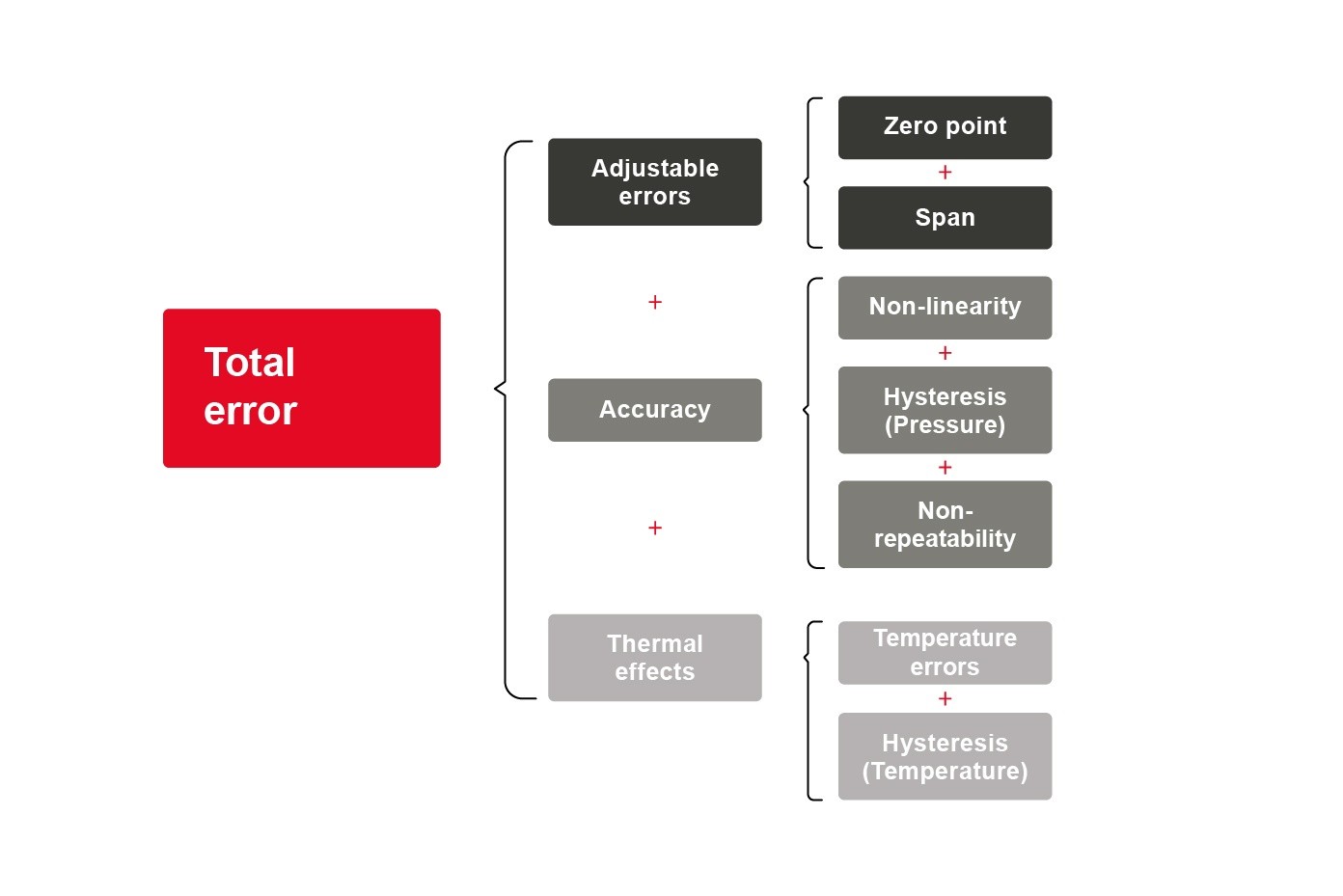

Mechanical, chemical and thermal influences cause the accuracy of a measuring device to change over time. This aging process is normal and cannot be avoided, so it is essential to detect these changes in good time by means of calibration.

Calibrating pressure gauges matters for several reasons. First, it is required for compliance with established standards such as ISO 9001, to name just one. Second, manufacturers gain concrete benefits, such as process improvements and cost savings (for example, by using the correct quantities of raw materials). This can pay off considerably: according to a 2008 study by the Nielsen Research Company, faulty calibration costs manufacturing companies an average of 1.7 million dollars per year. Calibration is also a central component of quality assurance, and in some sectors, such as the chemical industry, consistent and error-free calibration is relevant to safety.

Definition: Calibration, adjustment and verification

The terms calibration, adjustment and verification are often used synonymously, but they differ significantly. In a calibration, the indication of the measuring instrument under test is compared with the value given by a standard. The standard is a reference device whose accuracy is known and assured. Every measuring device must be traceable to a national standard through an unbroken chain of comparative measurements (“traceability”). For pressure gauges, the primary standards at the very top of this calibration hierarchy are generally deadweight testers (also known as piston manometers), which are used in national institutes and calibration laboratories.
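
The comparison at the heart of a calibration can be illustrated with a short Python sketch. It assumes pressures in bar and simply reports the deviation of the device under test from the reference standard at each point. The function name `deviations` and the readings are hypothetical, and traceability itself (the chain of comparisons up to the national standard) is not modelled.

```python
# Minimal sketch: compare a device under test (DUT) against a reference standard.
# Pressures are in bar; the readings below are hypothetical illustration values.


def deviations(reference, indicated):
    """Return the measurement deviation (indicated - reference) at each point."""
    if len(reference) != len(indicated):
        raise ValueError("reference and indicated readings must pair up")
    return [ind - ref for ref, ind in zip(reference, indicated)]


reference = [0.0, 5.0, 10.0]    # values set by the reference standard
indicated = [0.01, 5.03, 9.98]  # values displayed by the device under test
for ref, dev in zip(reference, deviations(reference, indicated)):
    print(f"{ref:6.2f} bar: deviation {dev:+.3f} bar")
```

A positive deviation means the device reads high; whether a deviation is acceptable depends on the accuracy class of the instrument.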

During adjustment (also termed alignment), an intervention is made directly in the measuring device to minimize measurement errors, with the aim of correcting inaccuracies that have arisen through aging. An adjustment is therefore generally preceded by a calibration, which reveals the deviations to be corrected. Because the adjustment changes the device, a further calibration is carried out afterwards to check and document the correction.
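
The calibrate, adjust, recalibrate order can be made concrete with a numerical simulation. This sketch assumes, purely for illustration, that the device's error is linear (a zero offset plus a span error), so that a two-point zero/span correction derived from the readings at zero and full scale removes it. The drift values, the 0 to 10 bar range and all function names are hypothetical. On a real instrument the adjustment is made on the device itself, not applied to recorded readings.

```python
# Minimal sketch of calibrate -> adjust -> recalibrate for an instrument whose
# error is ASSUMED to be purely linear (a zero offset plus a span/gain error).


def simulated_readings(true_pressures, offset, gain):
    """Readings of a drifted device: reading = gain * pressure + offset."""
    return [gain * p + offset for p in true_pressures]


def two_point_correction(p_zero, r_zero, p_full, r_full):
    """Derive (gain, offset) corrections from readings at zero and full scale."""
    gain = (p_full - p_zero) / (r_full - r_zero)
    offset = p_zero - gain * r_zero
    return gain, offset


def apply_correction(readings, gain, offset):
    """Effect of the adjustment on the readings."""
    return [gain * r + offset for r in readings]


points = [0.0, 2.5, 5.0, 7.5, 10.0]                                 # bar, hypothetical range
as_found = simulated_readings(points, offset=0.05, gain=1.004)      # hypothetical drift
gain_corr, offset_corr = two_point_correction(points[0], as_found[0],
                                              points[-1], as_found[-1])
as_left = apply_correction(as_found, gain_corr, offset_corr)        # recalibration check
print("as-found errors:", [round(r - p, 4) for p, r in zip(points, as_found)])
print("as-left errors: ", [round(r - p, 4) for p, r in zip(points, as_left)])
```

The second calibration ("as-left") documents that the correction worked; with a non-linear error a two-point correction would leave residual deviations between zero and full scale.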

Verification is a special form of calibration. It is required whenever the device under test is subject to legal control, which is the case when the accuracy of a measurement is in the public interest. This applies in particular when the measured results directly influence the price of a product, as with the flow meters installed at filling stations. In Germany, verification is the responsibility of the National Weights and Measures Office and state-approved test centers.

The calibration of pressure gauges: Requirements

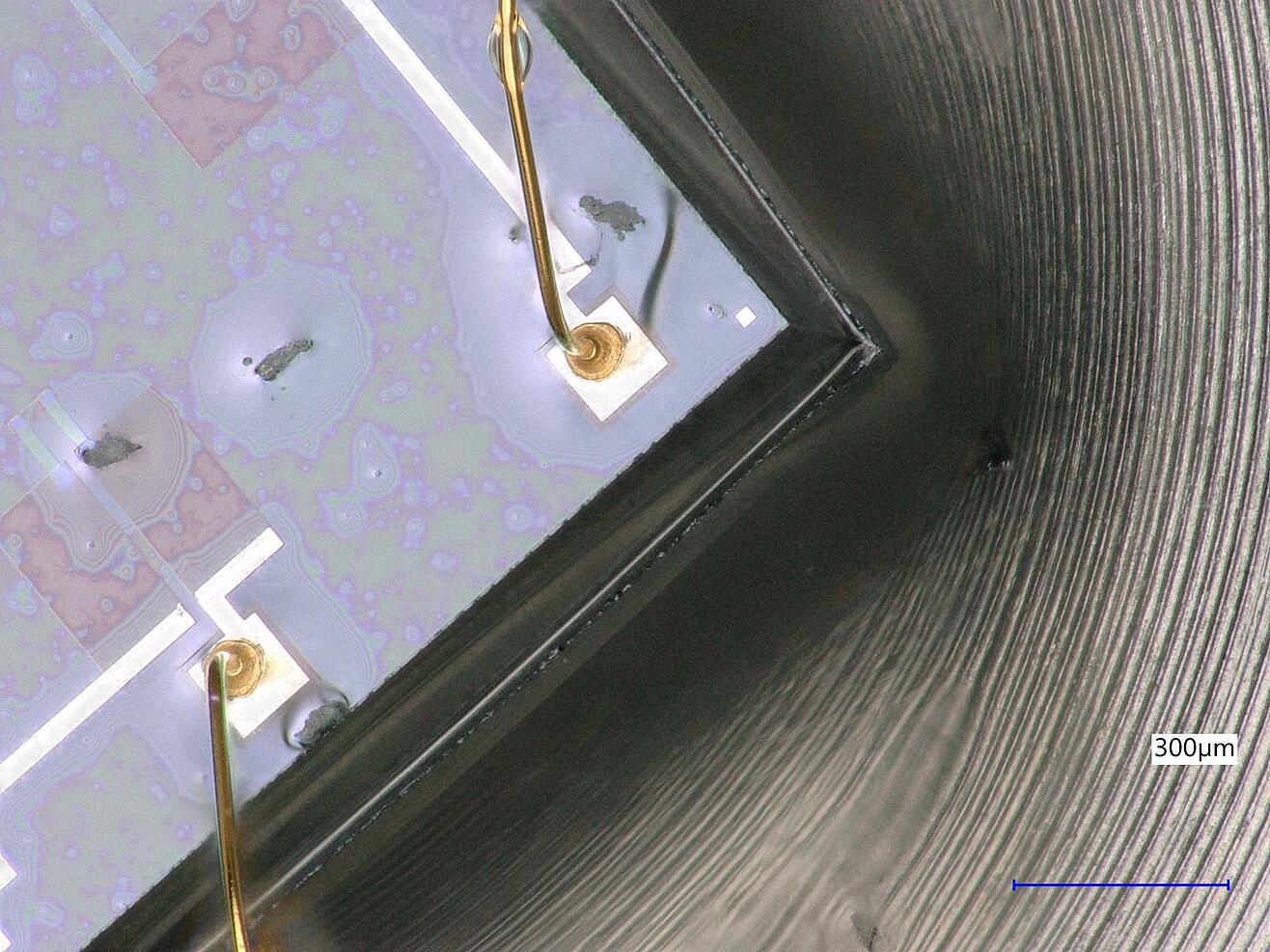

Before calibrating, it must first be established whether the measuring device is capable of being calibrated at all. The German Calibration Service (DKD) has published the DKD-R 6-1 directive for the calibration of pressure gauges. For mechanical pressure gauges, the DKD stipulates a number of tests, divided into appearance tests (visual inspection for damage, contamination and cleanliness; visual inspection of the labeling) and functional tests (integrity of the line system of the device under test, electrical functionality, faultless operation of the control elements).
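
The preliminary tests can be recorded as a simple checklist in which the device counts as calibration-capable only if every test passes. This is a hypothetical sketch: the class and field names are illustrative and mirror the tests listed above, not any data format defined by DKD-R 6-1.

```python
from dataclasses import dataclass


@dataclass
class PreCalibrationChecks:
    # Appearance tests
    no_visible_damage: bool
    clean_and_uncontaminated: bool
    labeling_legible: bool
    # Functional tests
    line_system_intact: bool
    electrical_function_ok: bool
    controls_work: bool

    def failed(self):
        """Names of all tests that did not pass, in declaration order."""
        return [name for name, passed in vars(self).items() if not passed]

    def calibration_capable(self):
        """The device may be calibrated only if no test failed."""
        return not self.failed()


checks = PreCalibrationChecks(
    no_visible_damage=True,
    clean_and_uncontaminated=True,
    labeling_legible=True,
    line_system_intact=False,  # e.g. a leak found in the pressure line
    electrical_function_ok=True,
    controls_work=True,
)
print(checks.calibration_capable(), checks.failed())
```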

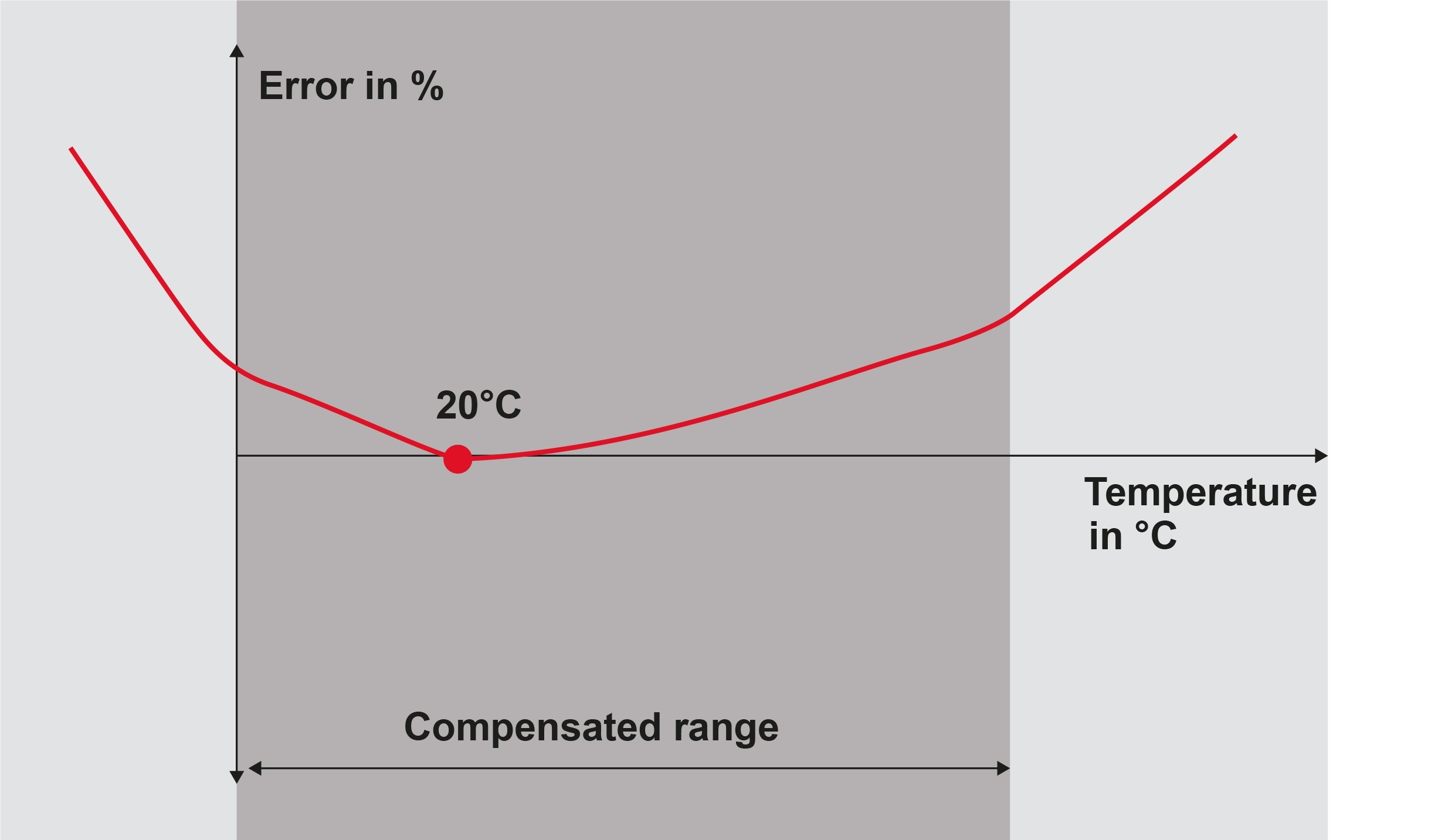

The next chapter of the DKD-R 6-1 directive addresses the environmental conditions: the calibration is to be performed at a stable ambient temperature and, ideally, under the actual operating conditions of the measuring instrument.
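
Whether the ambient temperature was stable can be checked from values logged during the calibration. In this minimal sketch, “stable” is interpreted as the spread of the logged readings staying within a tolerance. `MAX_DRIFT_K` is a hypothetical placeholder, not a figure taken from DKD-R 6-1, and should be replaced by the limit your own procedure specifies.

```python
MAX_DRIFT_K = 1.0  # HYPOTHETICAL tolerance in kelvin, not taken from DKD-R 6-1


def temperature_stable(readings_c, max_drift_k=MAX_DRIFT_K):
    """True if the spread of logged ambient temperatures stays within tolerance."""
    if not readings_c:
        raise ValueError("no temperature readings logged")
    return max(readings_c) - min(readings_c) <= max_drift_k


print(temperature_stable([20.1, 20.3, 20.2]))
```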

The calibration of pressure gauges: Procedure

Once calibration capability has been confirmed and suitable environmental conditions are in place, the actual calibration can begin. The pressure gauge should preferably be calibrated as a whole (the complete measuring chain), taking the prescribed mounting position into account.

The DKD-R 6-1 directive describes different calibration sequences for different accuracy classes. Here we limit ourselves to calibration sequence A, intended for an accuracy class of < 0.1, which is also the most extensive sequence.

Calibration sequences according to DKD-R 6-1 directive

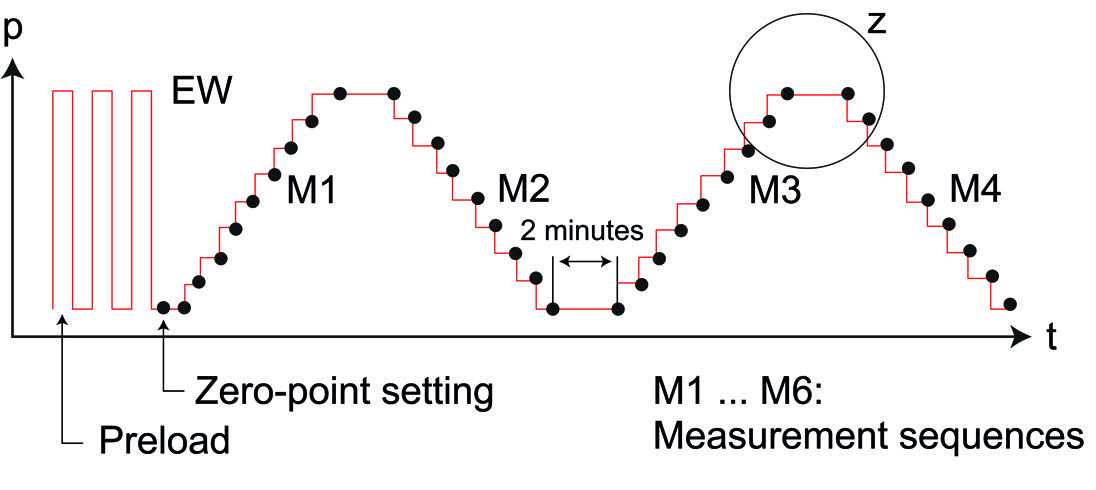

For calibration sequence A, the DKD stipulates three preloads up to the full measuring range before the actual measurement series are carried out. In each case, the maximum pressure must be held for 30 seconds before it is fully released.
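
The preload phase lends itself to a small automation sketch. `SimulatedController` is a hypothetical stand-in that only logs setpoints, since no particular controller API is implied here, and the `sleep` function is injectable so the routine can be exercised without actually waiting 30 seconds per preload.

```python
import time

PRELOAD_CYCLES = 3   # three preloads up to full scale
PRELOAD_HOLD_S = 30  # hold time at maximum pressure, in seconds


class SimulatedController:
    """Hypothetical stand-in for a pressure controller; it only logs setpoints."""

    def __init__(self):
        self.setpoints = []

    def set_pressure(self, bar):
        self.setpoints.append(bar)


def preload(controller, full_scale_bar, sleep=time.sleep):
    """Load to full scale, hold, and fully release, PRELOAD_CYCLES times."""
    for _ in range(PRELOAD_CYCLES):
        controller.set_pressure(full_scale_bar)  # load to the full measuring range
        sleep(PRELOAD_HOLD_S)                    # hold the maximum pressure
        controller.set_pressure(0.0)             # release completely


ctrl = SimulatedController()
waits = []
preload(ctrl, full_scale_bar=10.0, sleep=waits.append)  # record waits instead of sleeping
print(ctrl.setpoints)
```

Passing `time.sleep` (the default) would make the same routine wait in real time against a real controller object exposing a `set_pressure` method.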

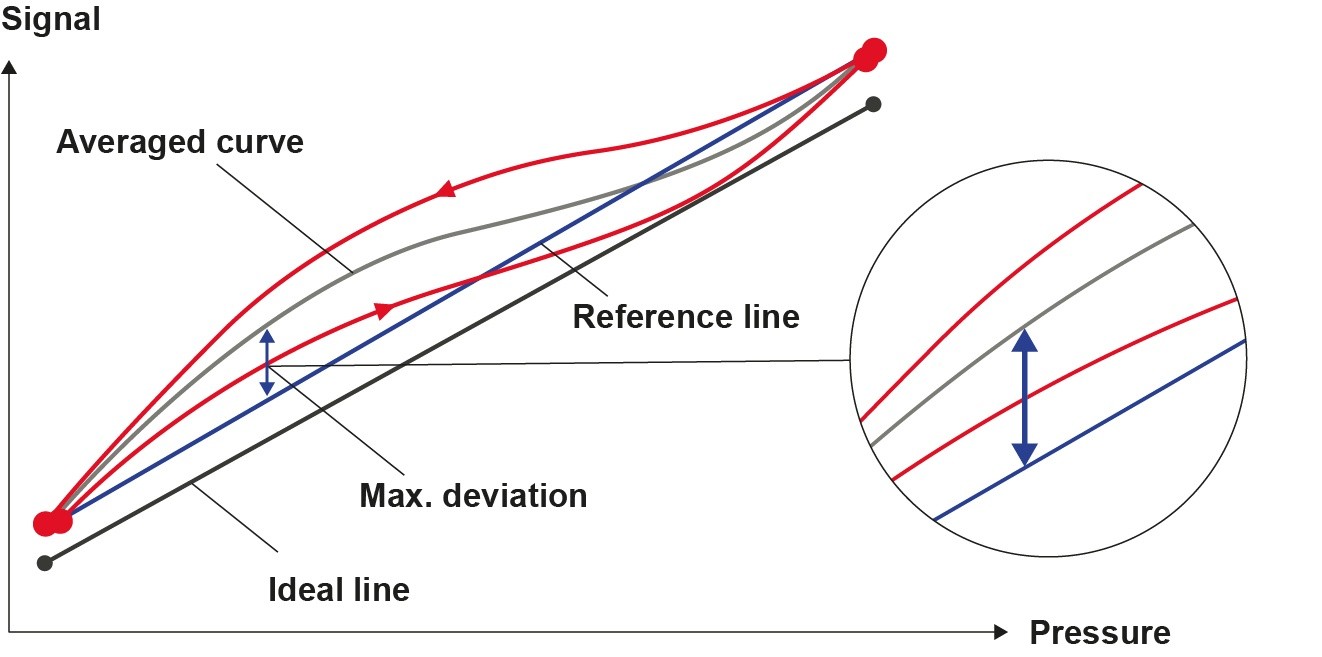

Next, nine measurement points distributed evenly across the measuring range are approached by continuously increasing the pressure, with the zero point counting as the first measurement point. Each target point has to be reached “from below”, so the pressure may only be increased slowly. If a target point is overshot, the resulting hysteresis falsifies the result; in that case the pressure must be reduced substantially so that the point can be approached from below again. Once the target value is reached, it must be held for at least 30 seconds before it is read.
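
The nine measurement points and the “from below” rule can be sketched as follows. The points are assumed to include both zero and full scale, which puts them one eighth of the range apart. The approach is modelled as discrete setpoints: `step` (the increment) and `backoff` (how far below the target to drop after an overshoot) are hypothetical parameters, whereas a real controller raises the pressure continuously. The 30-second hold before each reading is not modelled here.

```python
def measurement_points(full_scale, n=9):
    """n evenly spaced points from zero (the first point) up to full scale."""
    return [full_scale * i / (n - 1) for i in range(n)]


def approach_from_below(current, target, step, backoff):
    """Setpoints used to reach `target` without overshooting it.

    If the pressure is already above the target (an overshoot), it is first
    dropped to `target - backoff` and the target is then approached again.
    """
    if step <= 0 or backoff <= 0:
        raise ValueError("step and backoff must be positive")
    path = []
    if current > target:                       # overshoot: back off below target
        current = max(target - backoff, 0.0)
        path.append(current)
    while current < target:
        current = min(current + step, target)  # never step past the target
        path.append(current)
    return path


print(measurement_points(10.0))
print(approach_from_below(current=1.4, target=1.25, step=0.5, backoff=0.5))
```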

This process is repeated for all remaining measurement points. The final point has one peculiarity: it is held for a further two minutes, then read again and the reading documented.

This completes the first half of the first measurement series. The second half runs in reverse, approaching the measurement points from top to bottom. The pressure should be reduced only slowly here so that, this time, the target value is not undershot. The first measurement series ends with a reading at the zero point.

The second measurement series can begin once the instrument has been pressureless for three minutes. It repeats the cycle of raising and then lowering the pressure over the individual measurement points.
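
The two measurement series of sequence A can be summarized as a schedule of steps with hold times. This sketch is one interpretation of the description above, not a schedule taken from DKD-R 6-1: it omits the preloads, applies the 30-second hold to every point in both directions, and treats the re-read at full scale as the top reading of the downward half, so full scale is not approached a second time on the way down. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Step:
    action: str      # "set", "read", "hold" or "vent"
    pressure: float  # pressure in bar associated with the step
    hold_s: int      # waiting time after the step, in seconds


HOLD_S = 30        # minimum hold before each reading
TOP_EXTRA_S = 120  # further hold at the final point before re-reading
VENT_S = 180       # pressureless time before the second series


def series(points):
    """One measurement series: up through all points, then back down to zero."""
    steps = []
    for p in points:                             # upward half, approached from below
        steps += [Step("set", p, HOLD_S), Step("read", p, 0)]
    top = points[-1]
    steps += [Step("hold", top, TOP_EXTRA_S), Step("read", top, 0)]
    for p in reversed(points[:-1]):              # downward half, approached from above
        steps += [Step("set", p, HOLD_S), Step("read", p, 0)]
    return steps


def sequence_a_series(full_scale, n=9):
    """Both measurement series of sequence A (preloads not included)."""
    points = [full_scale * i / (n - 1) for i in range(n)]
    return series(points) + [Step("vent", 0.0, VENT_S)] + series(points)


schedule = sequence_a_series(10.0)
reads = sum(1 for s in schedule if s.action == "read")
total_s = sum(s.hold_s for s in schedule)
print(f"{reads} readings, {total_s} s of hold and pause time")
```

Under these assumptions the schedule contains 36 readings and 1440 seconds (24 minutes) of cumulative hold and pause time, excluding the time needed to change the pressure between points.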

Calibration sequence A according to DKD-R 6-1 directive

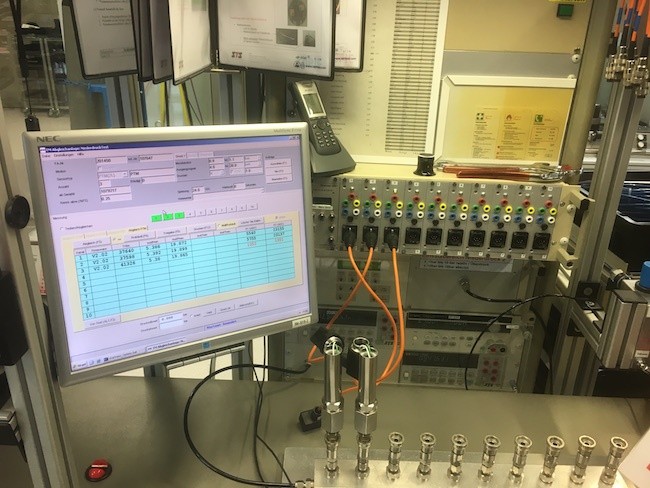

In-house calibration of pressure transmitters

In most industrial applications, calibration by a specialist laboratory is neither necessary nor practical. For on-site calibration of pressure gauges, portable pressure calibrators are suitable. They are less precise than a deadweight tester, but as a rule entirely sufficient. These hand-held devices combine a working standard with pressure generation. To calibrate a pressure transmitter, the pressure and electrical connections between the transmitter and the test instrument are first established, and a zero-point calibration is then carried out with the valves open. The individual test pressures can then be set with the integrated pump. The resulting electrical signals are measured and stored by an integrated data logger, from which the data can be read out on a PC.
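
Evaluating the logged data on the PC might look like the following sketch. It assumes a transmitter with a linear 4 to 20 mA output ranged from zero to full scale, which the text does not specify; the logged values are hypothetical, and the error is expressed as a percentage of the output span. The CSV layout is an illustrative choice, not a format used by any particular calibrator.

```python
import csv
import io


def expected_ma(pressure, full_scale, lo_ma=4.0, hi_ma=20.0):
    """Ideal output of an ASSUMED linear 4-20 mA transmitter ranged 0..full_scale."""
    return lo_ma + (hi_ma - lo_ma) * pressure / full_scale


def error_percent_span(measured_ma, pressure, full_scale, lo_ma=4.0, hi_ma=20.0):
    """Deviation of the measured current from the ideal, in % of the output span."""
    ideal = expected_ma(pressure, full_scale, lo_ma, hi_ma)
    return 100.0 * (measured_ma - ideal) / (hi_ma - lo_ma)


def export_csv(rows, full_scale):
    """Write logged (pressure, measured mA) pairs as CSV text for use on a PC."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["pressure_bar", "measured_mA", "expected_mA", "error_pct_span"])
    for pressure, ma in rows:
        writer.writerow([pressure, ma,
                         round(expected_ma(pressure, full_scale), 4),
                         round(error_percent_span(ma, pressure, full_scale), 4)])
    return buf.getvalue()


logged = [(0.0, 4.002), (5.0, 12.010), (10.0, 19.996)]  # hypothetical logger data
print(export_csv(logged, full_scale=10.0))
```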