Vibrations: The pressure sensor is also affected

In virtually all applications involving compressors, turbines and motors, vibrations occur and also act on the measurement sensors. Without appropriate precautions, they can impair the function of the pressure transducers in use.

The effects of vibration on pressure sensors can be serious. On the one hand, the measurement signal can be disturbed by superimposition: if the vibration is transmitted to the output signal, end users will not receive useful measurement results. This effect appears immediately. On the other hand, continuous vibration loading can lead to material fatigue: weld seams can crack and threaded connections can work loose. Whether through distorted measurement results or broken mechanical connections, vibrations can render pressure sensors inoperable. Fortunately, these undesirable effects can be largely avoided.
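To make the superimposition effect concrete, the following minimal sketch adds a sinusoidal vibration component to a constant pressure reading. All numbers (10 bar static pressure, 0.2 bar disturbance amplitude, 150 Hz vibration, 6 kHz sampling) and the function name are hypothetical values chosen for illustration, not data from any sensor.

```python
import math


def superimposed_signal(static_bar, vib_amplitude_bar, vib_freq_hz,
                        sample_rate_hz, n_samples):
    """Return samples of a constant pressure with a sine disturbance added."""
    return [
        static_bar
        + vib_amplitude_bar
        * math.sin(2 * math.pi * vib_freq_hz * i / sample_rate_hz)
        for i in range(n_samples)
    ]


# Hypothetical example: one second of data at 6 kHz.
samples = superimposed_signal(10.0, 0.2, 150.0, 6000.0, 6000)

# The mean still reflects the true pressure, but individual readings
# swing by twice the disturbance amplitude.
mean_value = sum(samples) / len(samples)
peak_to_peak = max(samples) - min(samples)

print(f"mean pressure: {mean_value:.3f} bar")
print(f"peak-to-peak disturbance: {peak_to_peak:.3f} bar")
```

In this idealized case the average is unaffected, yet any single reading may be off by up to the full disturbance amplitude, which is why an unfiltered output signal becomes hard to use.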

Preventing damage to the pressure measurement system by vibrations

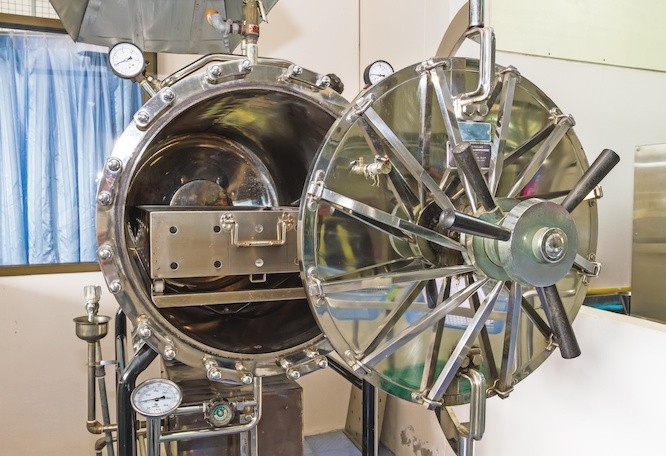

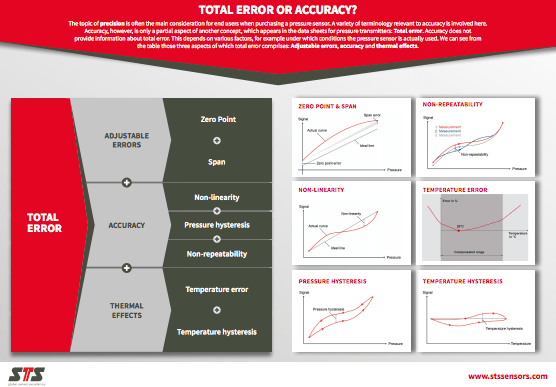

Prevention is the best measure. This requires that users know which vibrations occur in their application. The first step is to determine the vibration frequency of the application. Vibrations do not cause damage per se; what matters is whether they fall within the range the sensor is specified for. In manufacturers’ data sheets, the frequency range in which no interference occurs is often listed under “Tests”. The DIN EN 60068-2-6 standard is applied here: the test specimen is subjected to a defined frequency range over a predetermined test duration. The aim is to identify the characteristic frequencies of the test specimen. The test setup is shown in Figure 1.

Figure 1: Qualification of a prototype: Pressure sensor is screwed into an aluminum block that is loaded mechanically (vibration, acceleration)
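The comparison between the application's vibration frequency and the tested range from a data sheet can be sketched as follows. The frequency limits (10 Hz to 2000 Hz) and the function names are assumptions made for illustration; actual limits must be taken from the data sheet of the sensor in question. The second helper expresses the width of a frequency sweep in octaves, a common way of describing the span of a sinusoidal vibration test.

```python
import math


def within_tested_range(app_freq_hz, tested_min_hz, tested_max_hz):
    """True if the application's vibration frequency lies in the tested range."""
    return tested_min_hz <= app_freq_hz <= tested_max_hz


def sweep_octaves(f_min_hz, f_max_hz):
    """Width of a frequency range in octaves (one octave = doubling)."""
    return math.log2(f_max_hz / f_min_hz)


# Hypothetical data-sheet range, not taken from a real product.
TESTED_MIN_HZ = 10.0
TESTED_MAX_HZ = 2000.0

for freq in (150.0, 2500.0):
    ok = within_tested_range(freq, TESTED_MIN_HZ, TESTED_MAX_HZ)
    status = "inside" if ok else "outside"
    print(f"{freq:.0f} Hz is {status} the tested range")

print(f"sweep width: {sweep_octaves(TESTED_MIN_HZ, TESTED_MAX_HZ):.2f} octaves")
```

A frequency outside the tested range does not mean the sensor will fail, only that the data sheet makes no statement about it, so further measures should be considered.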

If vibrations arise that exceed the specifications of the pressure sensor, two approaches can be considered initially. The first concerns size and placement: how big is the pressure transducer and where is it installed? As a rule, the heavier and larger a pressure transducer is, the more strongly vibrations act on it and the lower its resistance to them. In strongly vibrating applications it may therefore be advantageous to use a smaller pressure transmitter, such as the ATM.mini, which is affected little by vibrations due to its small mass.
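The influence of mass follows from Newton's second law: at a given acceleration, the inertial force acting on the sensor and its connection grows in proportion to the sensor's mass. The sketch below illustrates this with two hypothetical sensor masses and a hypothetical peak acceleration of 20 g; none of these values comes from a data sheet.

```python
G0 = 9.80665  # standard gravity in m/s^2


def peak_inertial_force_n(mass_kg, peak_accel_g):
    """Peak inertial force in newtons, F = m * a, with a given in multiples of g."""
    return mass_kg * peak_accel_g * G0


# Hypothetical masses for a compact and a large transmitter.
compact_mass_kg = 0.05
large_mass_kg = 0.25
peak_accel_g = 20.0  # hypothetical vibration level

compact_force = peak_inertial_force_n(compact_mass_kg, peak_accel_g)
large_force = peak_inertial_force_n(large_mass_kg, peak_accel_g)

print(f"compact sensor: {compact_force:.1f} N")
print(f"large sensor:   {large_force:.1f} N")
```

With these assumed values, the sensor that is five times heavier loads its threaded connection with five times the force at the same vibration level.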

Besides the dimensions of the pressure transducer, its position in the application is also decisive. If it is mounted along the vibration axis, it is exposed to less vibration. If it is mounted across the vibration axis, however, it must withstand the full extent of the vibrations.

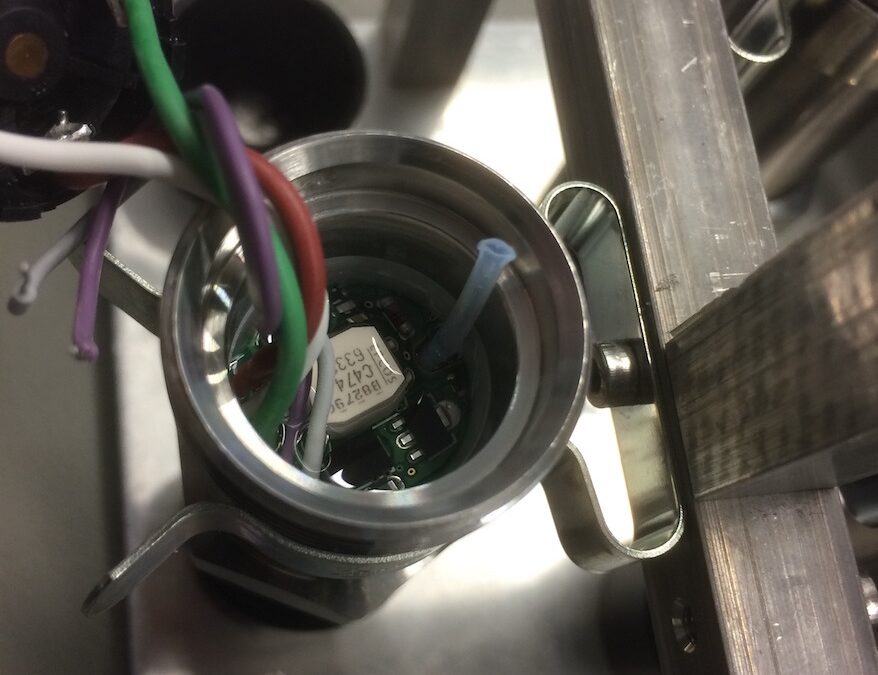

In addition, the pressure transducer itself can be equipped to tolerate vibrations even better. For this purpose, the pressure transmitter is encased in a soft sealing compound, which damps the vibrations and thus adequately protects the mechanical components. In Figure 2, this sealing compound is visible as a transparent, glossy layer.

Figure 2: Pressure sensor with sealing compound

In summary, strong vibrations can damage the measurement sensor. Selecting a pressure transmitter suited to the application (frequency range, dimensions) and mounting it optimally (along the vibration axis) minimizes the effects of vibrations. Further protection is provided by encasing the sensor in a damping sealing compound (see Figure 2).